Let’s rewind to where this all began. When diffusion models first made text-to-image a reality, it felt like magic. You could type a sentence and see it rendered into an original image in seconds. Then came video. Early text-to-video tools built on those same models, generating clips frame by frame and using AI to "dream" motion from static descriptions.

But it wasn’t perfect. The motion was floaty. Faces melted. Hands… well, we all remember the hands.

Still, the seed was planted. Suddenly, video creation wasn’t limited to people with cameras or editing skills. All you needed was a prompt.

In those early days, most text-to-video outputs were abstract, cinematic, or stylized. Great for concept art, mood boards, or sci-fi dreamscapes—not so much for a product demo or ad campaign.

But that’s changing fast.

In 2025, the capabilities of generative video tools have dramatically improved. It’s no longer just about generating beautiful scenes. It’s about coherence—motion that makes sense, characters that stay consistent, and facial expressions that match emotion.

Newer models can now:

The outputs aren’t just visually good—they’re increasingly emotionally legible. And that opens the door for something more powerful than pretty clips: conversation.

What we’re seeing now is a shift from diffusion to dialogue—from generating videos about something to generating videos that can say something.

Text-to-video models are starting to pair with large language models like GPT‑5. This means you’re not just getting video that moves—you’re getting video that communicates.

Imagine feeding in your product positioning, and the AI not only generates a video but also writes and delivers a relevant pitch through an avatar or voiceover. Now imagine that same avatar being able to answer questions, localize its message, or adjust tone for different audience segments.

That’s where we’re headed: generative video that’s not just reactive, but interactive.

It’s not far-fetched—it’s already happening in early form. And for marketers? That’s a game-changer.

Let’s face it: making good video content is still time-consuming. Even with AI tools, scripting, editing, and testing take energy most teams don’t have.

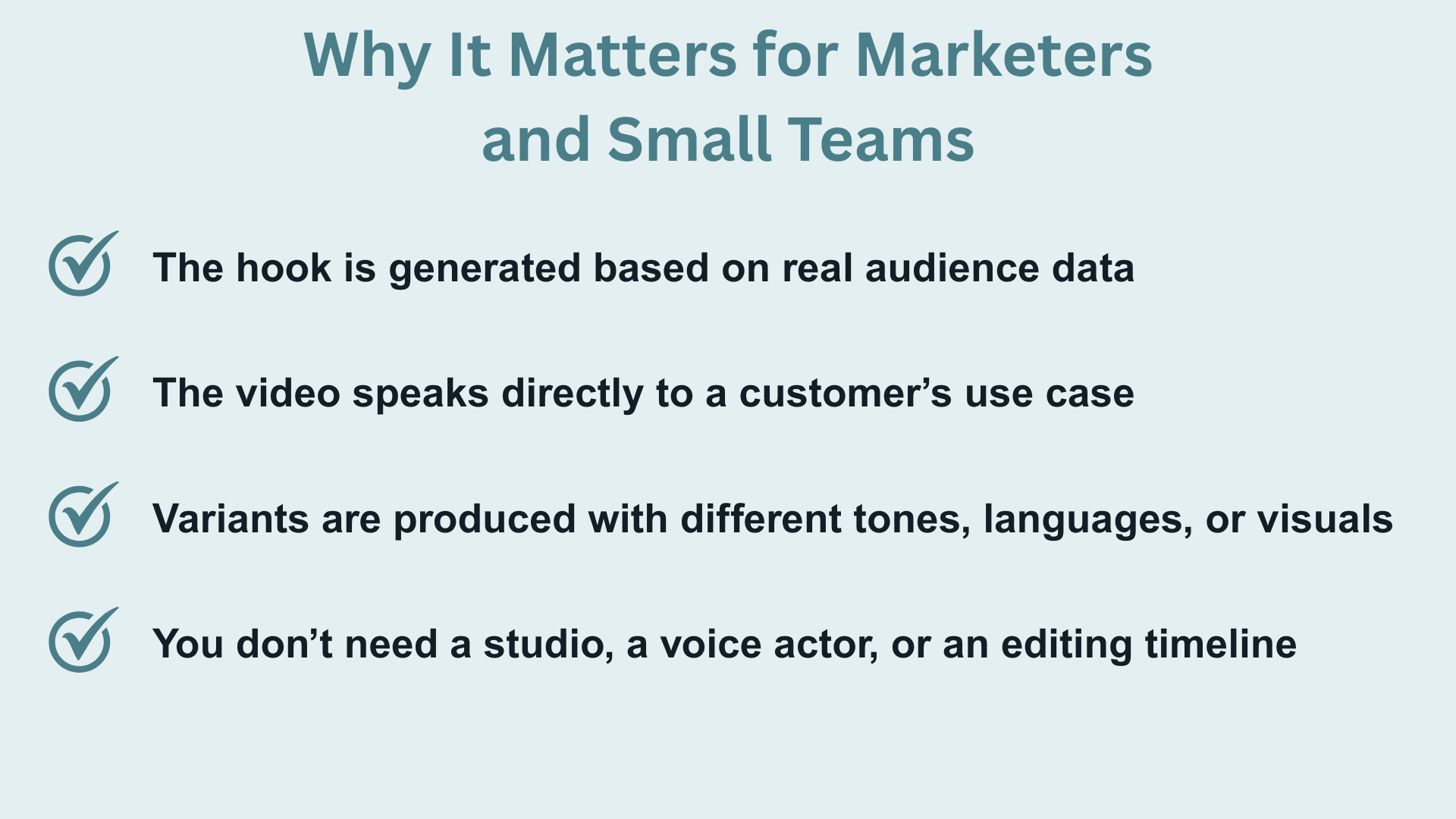

But imagine building an ad campaign where:

This is what the shift to dialogue-enabled AI video makes possible. Not just faster content—but smarter, more context-aware content that adapts to who’s watching.

It puts high-quality storytelling in the hands of solo creators, small businesses, and lean growth teams—without sacrificing clarity or creativity.

The evolution of text-to-video is moving at breakneck speed. Here’s what we’re likely to see soon:

Most importantly, generative video will move from "cool demo" to core workflow. It will become the way you concept, produce, and iterate ad creative—day to day.

At Clicks.Video, we’re focused on bringing the power of generative video to real marketing use cases—not just experiments.

While some tools aim for maximum realism or artistic output, we prioritize clarity, usability, and speed. You can drop in a product URL, generate video scripts and visual formats instantly, and test multiple hooks—all without sacrificing message quality or brand voice.

As the industry shifts toward more dialogue-driven video, Clicks.Video will continue integrating new models and formats—while keeping the workflow intuitive for non-technical users.

You won’t need to understand diffusion, LLM chaining, or prompt engineering. You just need to know your audience—and we’ll help you speak to them, fast.

What is diffusion in text-to-video AI?

Diffusion models are trained by adding noise to images and learning to reverse that process. To generate something new, they start from random noise and remove it step by step, guided by a prompt. Early text-to-video tools applied the same idea across frames to create motion.
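For the curious, here is a toy Python sketch of that noise-then-denoise idea. It is an illustration under loud assumptions, not how any particular product works: the four-value "image", the linear noise schedule, and the function names are all made up for this example, and the denoising step cheats by reusing the exact noise that was added. In a real model, a trained neural network has to predict that noise from the noisy input and the prompt.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal left after each step (linear schedule, assumed)."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward process: blend the clean values with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noisy = [signal_scale * x + noise_scale * n for x, n in zip(x0, noise)]
    return noisy, noise


def estimate_clean(noisy, predicted_noise, alpha_bar):
    """Invert the forward blend, given an estimate of the noise that was added."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [(x - noise_scale * n) / signal_scale for x, n in zip(noisy, predicted_noise)]


rng = random.Random(0)
pixels = [0.2, 0.5, 0.9, 0.1]  # hypothetical 4-pixel "image"
alpha_bars = alpha_bar_schedule(1000)

# Noise the image halfway through the schedule.
noisy, true_noise = add_noise(pixels, alpha_bars[500], rng)

# Stand-in for the model: we pass the true noise instead of a learned prediction.
recovered = estimate_clean(noisy, true_noise, alpha_bars[500])
```

With a perfect noise estimate, `recovered` matches `pixels` up to floating-point error. The hard part, and the part training is for, is producing a good noise estimate when all the model sees is the noisy input and a text prompt.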

What’s the difference between diffusion and dialogue-based AI video?

Diffusion focuses on visual generation. Dialogue-enabled tools combine visuals with language models—creating videos that speak, emote, or interact using dynamic scripts and voices.

How will GPT‑5 impact video generation?

GPT‑5 offers deeper reasoning, structured output, and the ability to call external tools. This allows for better scriptwriting, more context-aware creative, and potentially real-time interactivity in video avatars.

Can small businesses actually use this tech?

Yes—and that’s the whole point. With platforms like Clicks.Video, even solo marketers can create high-converting video ads using nothing but text and a product link.

What’s next for AI video in marketing?

Expect faster production, more personalized video content, and creative automation that integrates directly into campaign workflows.